Out-of-the-box AI vs. AI with Fishbrain.

The difference isn't subtle. It's the leap from short-term context to long-term cognition.

14 critical capabilities that define true long-term cognition.

| Capability | Out-of-the-Box LLM | With Fishbrain |

|---|---|---|

| Session Memory | Forgets everything once the chat ends. | Persistent scoped memory across sessions, projects, and personas. |

| Context Coherence | Loses track of details in long or complex conversations. | Maintains continuity and context even across weeks of dialogue. |

| Context Density | Limited by token window size — bloats easily. | Compresses context intelligently — only high-signal facts are injected. |

| Reflection / Learning | Static — must be re-taught from scratch every time. | Reflection Engine converts chats into structured, reusable memories. |

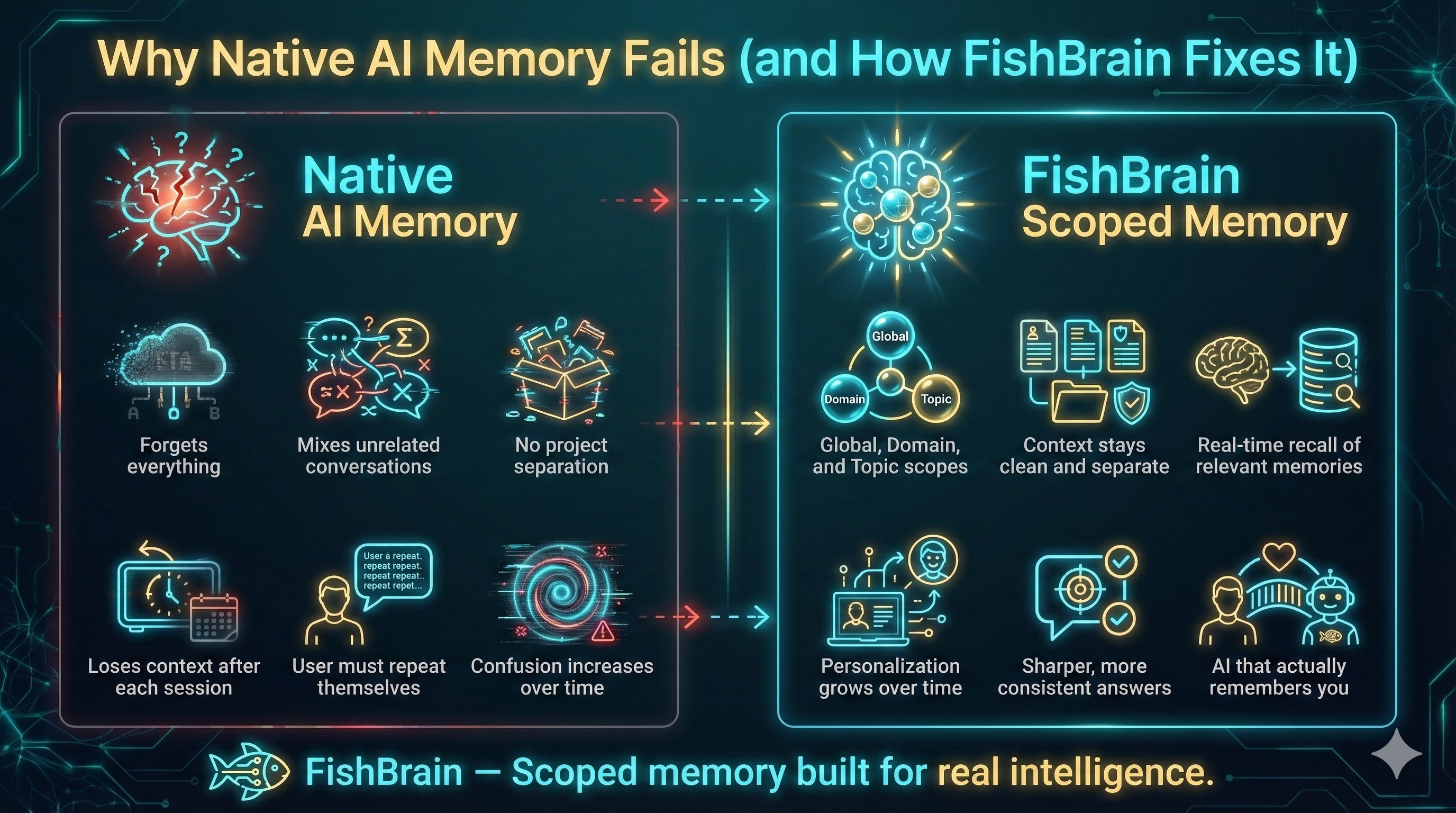

| Project Scoping | One flat memory for everything. | Hierarchical: Global → Domain → Topic — no bleed between contexts. |

| Transparency & Control | Hidden internal memory — can't see or edit what's stored. | Every memory is visible, editable, and exportable — your data, your way. |

| Data Ownership | Controlled by the model provider. | 100% user-owned and portable across models and platforms. |

| Model Lock-In | Bound to one vendor's memory implementation. | Model-agnostic: works with GPT, Claude, Gemini, Grok, and more. |

| Personalization | Implicit and opaque — no control over what the model remembers. | Explicit, scoped, opt-in personalization that respects your intent. |

| Multimodal Recall | Text-only and temporary. | Designed for text, images, and file metadata — persistent multimodal memory. |

| Pause / Resume Thinking | Interruptions destroy context — must restart generation. | Pause-Resume Engine lets AI freeze mid-thought and pick up seamlessly later. |

| Memory Hygiene | None — duplicates, noise, and outdated info build up. | Automatic reflection, deduplication, and scoring keep memory sharp. |

| Context Awareness | Treats every request as isolated. | Understands history, goals, and prior decisions within the same scope. |

| API Extensibility | Closed — no access to memory or embeddings. | Open API for scoped search, reflection, and injection — plug into anything. |

| Result | 💤 Short-term assistant. | ⚡ Long-term cognitive partner. |
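To make the Project Scoping row concrete, here is a minimal Python sketch of how a Global → Domain → Topic hierarchy could keep memories from bleeding between contexts. It is an illustration only, not Fishbrain's actual implementation or API: the names `Memory`, `ScopedStore`, and `visible_from` are hypothetical, and representing a scope as a tuple of path segments is an assumption made for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    # Hypothetical scope encoding: () is Global, ("work",) is a Domain,
    # ("work", "billing") is a Topic inside that Domain.
    scope: tuple[str, ...]
    text: str


class ScopedStore:
    """Illustrative in-memory store; not Fishbrain's real API."""

    def __init__(self) -> None:
        self._items: list[Memory] = []

    def add(self, scope: tuple[str, ...], text: str) -> None:
        self._items.append(Memory(tuple(scope), text))

    def visible_from(self, scope: tuple[str, ...]) -> list[Memory]:
        # A memory is visible when its scope is the requested scope or one
        # of its ancestors, i.e. a prefix of the requested path.
        scope = tuple(scope)
        return [m for m in self._items if scope[: len(m.scope)] == m.scope]


store = ScopedStore()
store.add((), "Prefers metric units")
store.add(("work",), "Team standardizes on Python")
store.add(("work", "billing"), "Invoices are due on the 15th")
store.add(("home",), "Kitchen remodel starts in May")

for memory in store.visible_from(("work", "billing")):
    print(memory.text)
```

In this sketch a Topic sees its own memories plus those of its Domain and the Global level, while sibling Domains stay invisible to each other. Whether a Domain should also see the memories of its Topics is a design choice; here it does not.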

💫 Coherence Through Context Density

Standard models forget, ramble, and repeat because they work within limited token windows. Fishbrain uses semantic injection and reflection to keep responses tight, coherent, and context-aware, however long or detailed the conversation becomes.
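As a rough illustration of the idea of injecting only high-signal facts, the Python sketch below ranks stored memories against the current request and keeps the best ones that fit a token budget. Everything in it is an assumption made for the example: `overlap_score` and `select_for_injection` are hypothetical names, word overlap stands in for real semantic similarity (which would more likely use embeddings), and whitespace word counts stand in for a real tokenizer. It does not describe how Fishbrain itself scores or injects memories.

```python
def overlap_score(query: str, memory: str) -> float:
    """Fraction of query words that also appear in the memory (toy relevance)."""
    query_words = set(query.lower().split())
    memory_words = set(memory.lower().split())
    if not query_words:
        return 0.0
    return len(query_words & memory_words) / len(query_words)


def select_for_injection(
    query: str,
    memories: list[str],
    budget_tokens: int,
    min_score: float = 0.3,
) -> list[str]:
    """Pick the most relevant memories that fit within the budget."""
    ranked = sorted(memories, key=lambda m: overlap_score(query, m), reverse=True)
    chosen: list[str] = []
    used = 0
    for memory in ranked:
        if overlap_score(query, memory) < min_score:
            break  # everything after this point is lower-signal
        cost = len(memory.split())  # crude stand-in for a token count
        if used + cost <= budget_tokens:
            chosen.append(memory)
            used += cost
    return chosen


memories = [
    "the billing service is written in go",
    "user prefers dark mode",
    "billing invoices are due on the 15th",
]
selected = select_for_injection("when are billing invoices due", memories, budget_tokens=20)
print(selected)
```

With these sample memories, only the invoice fact clears the relevance threshold, so the prompt grows by a single short line instead of the whole history.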

"It's basically the difference between an AI that remembers you… and one that knows you."

Don't settle for memory-loss AI.

Experience the leap from short-term assistant to long-term cognitive partner.

Want to dive deeper?